How to fight Google's Core Algo Update (Part 1)

Disavow Toxic Links (I Call Them Barnacles)

I don’t know what happened to my site, chrisabraham.com. I don’t blog there as much these days, so maybe Google just wants Hot and Fresh Donuts and my personal site was getting a little stale. But I went over to my SEMRush account and checked how toxic the backlinks to my site were. I hadn’t looked in years. It was pretty bad. Since you don’t own any site but your own, you don’t control who links to you, or why, how, where, or to what (there are a lot of links to images that didn’t survive the moves from platform to platform over time).

I call them barnacles. You don’t even know they’re there until you don a mask and check your hull. They seem harmless until you realize that they not only mar your boat but, with all their bulk, also spoil its hydrodynamic efficiency, sometimes nuking its speed in knots. Same thing with toxic backlinks!

I spent the next two hours connecting my SEMRush account to my Google Search Console and Google Analytics accounts, and then I downloaded my old disavow text file and automagically synced it into SEMRush. Next, I went into SEMRush’s Backlink Audit tool, ran it, and then went through at least a thousand records by hand, disavowing most of them. There was so much garbage. It’s also a treasure trove: I have been on this domain since 1999, so some really old-as-hell links are still alive.

When I was done, I had disavowed 2,891 domains and 3 URLs, up from 1,400 domains in the previous iteration. So I was able to scrape all but the most sentimental, high-DA (Domain Authority) barnacles from the hull. Now I feel like chrisabraham.com slips through the water more sleekly.
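If you ever maintain the disavow list by hand instead of letting SEMRush generate it, the format Google’s disavow tool expects is simple: a plain-text file with one entry per line, `domain:example.com` to disavow a whole domain, a bare URL to disavow a single page, and `#` for comments. Here is a minimal Python 3.9+ sketch, assuming you already have the newly flagged domains and URLs as lists; the function names and sample domains are hypothetical, not part of any SEMRush or Google API.

```python
"""Merge newly flagged domains and URLs into a Google-style disavow file.

A minimal sketch: function names and sample entries are hypothetical.
"""


def parse_disavow(text: str) -> tuple[set[str], set[str]]:
    """Split existing disavow text into (domains, urls), skipping blanks and # comments."""
    domains: set[str] = set()
    urls: set[str] = set()
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("domain:"):
            domains.add(line[len("domain:"):].strip().lower())
        else:
            urls.add(line)
    return domains, urls


def build_disavow(old_text: str, new_domains: list[str], new_urls: list[str]) -> str:
    """Return merged disavow text: sorted 'domain:' lines first, then individual URLs."""
    domains, urls = parse_disavow(old_text)
    domains.update(d.strip().lower() for d in new_domains if d.strip())
    urls.update(u.strip() for u in new_urls if u.strip())
    lines = ["# Disavow list merged by script"]
    lines += [f"domain:{d}" for d in sorted(domains)]
    lines += sorted(urls)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    previous = "# previous iteration\ndomain:spammy-directory.example\n"
    merged = build_disavow(
        previous,
        new_domains=["Spammy-Directory.example", "link-farm.example"],
        new_urls=["http://scraper.example/copied-post.html"],
    )
    print(merged, end="")
```

Lowercasing and de-duplicating means a domain flagged twice (in the old file and in a new audit) only appears once, which keeps a 2,891-domain list manageable. Save the result as a UTF-8 or plain ASCII `.txt` file before uploading it.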

Don’t Abandon Your Site for Dead

One of the big problems with my site is that I haven’t been blogging for a couple of years. I feel like I burnt myself out, first with Biznology, a site I wrote for about these things from January 18, 2011, to November 25, 2019: 8 years, 10 months, 8 days! That, along with launching Newconomy.media, was a lot. So I guess I slowed down, both on the Chris Abraham blog and on the Gerris Corp blog. But you really benefit from keeping your site updated, because if you don’t keep sending fresh Sitemap.xml and social signals to Google, they’ll relegate you to archival status. That’s worse than second or third string; it’s the injured reserve list. No bueno.

Sitemaps are Really Very Important in 2021

Now that I mention Sitemaps (or Sitemap.xml, to differentiate it from the human-focused sitemap you used to see on sites in the 90s), I should explain what they are and what they do. Remember RSS and Atom? Sitemaps are the new, universally adopted, machine-formatted way for Google and Bing to latch onto your site, which is more efficient than sending bots around to spider your site autorandomly. Remember the old-school ping lists that Movable Type used to send out to ping servers? Google and Bing are the spiders in this analogy, and your Sitemap is the vibrating, struggling little bug caught in the web, but in a good way.

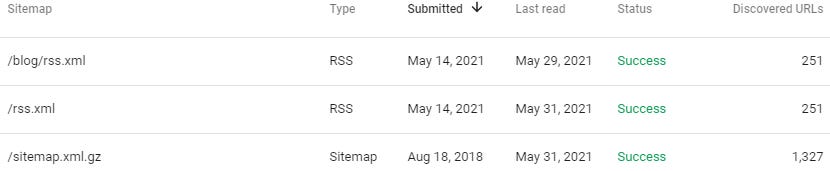

Every time you publish new content, Google and Bing get an automated ping, and they can immediately head out onto their web and gobble up the new information (well, suck out its liquified innards). What’s better is that everything Google and Bing need to know is right there, and it’s virtually real-time. I just discovered something cool, too: if your site still publishes RSS, RSS2, or Atom feeds, you can submit those to both Google and Bing, and they’ll eat those too. My site is built on an old Plone platform (don’t ask), so the formatting is weird. Most sitemaps live at yourdomain.com/sitemap.xml, but mine lives at chrisabraham.com/sitemap.xml.gz instead (a .gz file is gzip-compressed; gzip is the standard compression format on Unix and GNU/Linux).
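For anyone curious what a sitemap actually contains, here is a minimal Python sketch that builds a standard sitemaps.org `<urlset>` and gzips it, which is the kind of file a sitemap.xml.gz holds. The example.com URLs and dates are made up, and this only produces the file; how your CMS publishes it and lets search engines know about it is up to the platform.

```python
"""Build a sitemaps.org <urlset> and gzip it, like a sitemap.xml.gz.

A minimal sketch: the example.com URLs and dates are hypothetical.
"""
import gzip
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> bytes:
    """Return UTF-8 sitemap XML from (absolute URL, YYYY-MM-DD last-modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as '&' in query strings, as the protocol requires.
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


def gzip_sitemap(xml_bytes: bytes) -> bytes:
    """Compress sitemap XML with gzip, the format behind the .gz extension."""
    return gzip.compress(xml_bytes)


if __name__ == "__main__":
    xml = build_sitemap([
        ("https://www.example.com/", "2021-06-01"),
        ("https://www.example.com/archive?year=1999&month=12", "2021-05-20"),
    ])
    print(xml.decode("utf-8"))
    # To publish it: write gzip_sitemap(xml) to a file named sitemap.xml.gz in your web root.
```

Building the XML with a real XML library rather than string concatenation is the design choice worth copying: a single unescaped `&` in a URL is enough to make the whole sitemap invalid.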

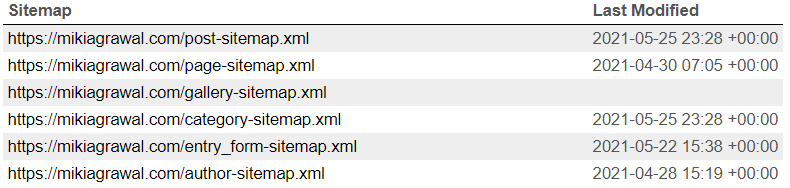

If you use Yoast, it also has some pretty nontraditional Sitemaps. It has a master sitemap, /sitemap_index.xml, which points to a series of six sitemaps: /post-sitemap.xml, /page-sitemap.xml, /gallery-sitemap.xml, /category-sitemap.xml, /entry_form-sitemap.xml, and /author-sitemap.xml. This makes up for what early Sitemaps lacked: they would only index articles and posts, the newsy stuff. Now, however, the sitemap covers all the content on your site, so when you change any page and press Publish or Post, Google and Bing will get a ping. Good stuff. Do it!
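Yoast’s master file is a sitemap index: a `<sitemapindex>` whose `<sitemap>` entries each point at one child sitemap. Here is a rough Python sketch of that structure using the six child names listed above; the domain and dates are placeholders, and Yoast generates its own index, so this is only meant to show what search engines receive.

```python
"""Build a sitemaps.org <sitemapindex> pointing at child sitemaps.

A minimal sketch: the domain and last-modified dates are hypothetical.
"""
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Child sitemap names as listed for Yoast in the post; the dates are made up.
CHILD_SITEMAPS = {
    "post-sitemap.xml": "2021-06-01",
    "page-sitemap.xml": "2021-05-28",
    "gallery-sitemap.xml": "2021-03-14",
    "category-sitemap.xml": "2021-06-01",
    "entry_form-sitemap.xml": "2020-11-02",
    "author-sitemap.xml": "2021-01-09",
}


def build_sitemap_index(base_url: str, children: dict[str, str]) -> bytes:
    """Return UTF-8 <sitemapindex> XML with one <sitemap> entry per child file."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for name, lastmod in children.items():
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = f"{base_url.rstrip('/')}/{name}"
        ET.SubElement(entry, "lastmod").text = lastmod
    return ET.tostring(index, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    print(build_sitemap_index("https://www.example.com/", CHILD_SITEMAPS).decode("utf-8"))
```

The per-child `<lastmod>` is what makes the split useful: a crawler can see that only, say, the post sitemap changed and skip re-reading the others.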

Redirect Dead Links to New Content

I thought that the only reason to care about 301 redirects was moving from one web platform to another, like from WordPress to Squarespace or from Squarespace to WordPress (I have done a lot of these moves lately for my Upwork clients). But no! During my search-and-destroy mission against toxic backlinks, I discovered that my own site hasn’t always lived on the same platform either. I have used Pyra, early Blogger, Greymatter, Plone, Hostnuke, WordPress, and now Plone again.

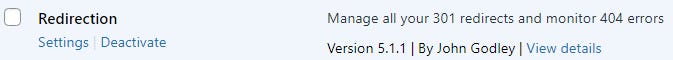

There are two decades of very old links to my site and my content that I want to keep, even though the content they point to doesn’t exist anymore. So always check your 404 logs to see what’s going on. Google hates sending visitors to Not Found pages, even super-witty ones. Also, redirecting every 404 to the index page isn’t nearly elegant enough for Google, which tends to treat those blanket redirects as “soft 404s.” So point each dead URL at its closest living replacement, and make everything as elegant as humanly possible. I really like the basic WordPress plugin, Redirection, for this sort of thing. It’s so simple but actually really elegant.
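Before building redirects, it helps to know which dead URLs actually get traffic. Here is a minimal Python sketch, assuming your server writes access logs in the common Apache/Nginx log format; it counts the most-requested 404 paths, then resolves each one against a hand-made redirect map, answering with a 301 to a specific page or an honest 404 rather than a blanket redirect to the home page. The sample log lines and the `REDIRECTS` mapping are hypothetical; on WordPress, a plugin like Redirection stores and serves this kind of map for you.

```python
"""Find the most-requested 404 paths in an access log and resolve them via a redirect map.

A minimal sketch: assumes Apache/Nginx common or combined log format; sample data is hypothetical.
"""
import re
from collections import Counter

# Matches the request and status fields, e.g. "GET /old/post.html HTTP/1.1" 404
REQUEST_STATUS = re.compile(r'"[A-Z]+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')

# Hypothetical map from dead paths to their closest living replacements.
REDIRECTS = {
    "/old/post.html": "/blog/new-post/",
    "/images/logo.gif": "/static/logo.png",
}


def top_404s(lines: list[str], limit: int = 10) -> list[tuple[str, int]]:
    """Count requests that returned 404 and return the most common paths."""
    hits = Counter()
    for line in lines:
        match = REQUEST_STATUS.search(line)
        if match and match.group("status") == "404":
            hits[match.group("path")] += 1
    return hits.most_common(limit)


def resolve(path: str, redirects: dict[str, str]) -> tuple[int, str]:
    """Return (301, new_path) for a mapped dead URL, otherwise (404, path)."""
    target = redirects.get(path)
    if target is not None:
        return 301, target
    return 404, path


if __name__ == "__main__":
    sample_log = [
        '203.0.113.7 - - [10/Jun/2021:13:55:36 +0000] "GET /old/post.html HTTP/1.1" 404 512 "-" "Mozilla/5.0"',
        '203.0.113.8 - - [10/Jun/2021:13:56:01 +0000] "GET /old/post.html HTTP/1.1" 404 512 "-" "Mozilla/5.0"',
        '203.0.113.9 - - [10/Jun/2021:13:57:12 +0000] "GET /images/logo.gif HTTP/1.1" 404 0 "-" "Mozilla/5.0"',
        '203.0.113.9 - - [10/Jun/2021:13:57:13 +0000] "GET / HTTP/1.1" 200 2048 "-" "Mozilla/5.0"',
    ]
    for path, count in top_404s(sample_log):
        status, location = resolve(path, REDIRECTS)
        print(f"{count:>4}  {path}  ->  {status} {location}")
```

Sorting by hit count tells you where to spend your redirect-writing time first; anything left as a 404 after that is probably junk nobody is clicking.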

Remove All Off-Site-Loading Content

The main page on my Chris Abraham site was hitting JavaScript slowdowns and general sluggishness that didn’t make sense, until I realized that there were Speed Vampires on my home page: images and applets that appeared on my home page but didn’t live on my server; they were loaded from external services on Other People’s Boxen! These guys!

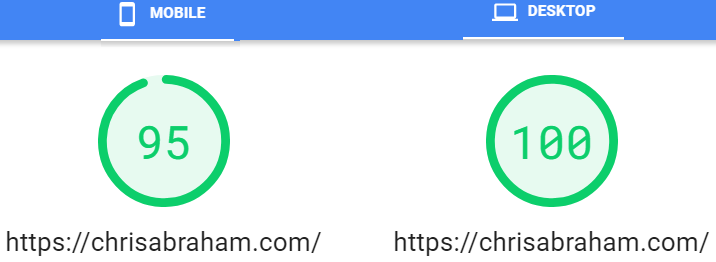

Luckily, I could adjust the settings on the Social Sharing plugin so that the buttons above only render on Posts and not on Pages, so that’s fixed. And since nobody cares about my terrible, spotty, periodic, self-absorbed podcast, ChrisCast, I removed the little icons I was too lazy to copy locally; instead, I just keep them in a sidebar on the ChrisCast Podcast page. I don’t care about getting speed-checked there. So now my site is wicked fast. At least, the main index page is.
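If you want to hunt down your own Speed Vampires, one rough approach is to scan a page’s HTML for scripts, images, iframes, and stylesheets whose host differs from your own. Here is a minimal Python sketch using only the standard library; the sample HTML and hostnames are hypothetical, it does not fetch the page for you, and it treats any other hostname (even your own subdomains or CDN) as external, so review its output rather than trusting it blindly.

```python
"""List resources on a page that load from hosts other than the page's own.

A minimal sketch: the sample HTML and hostnames are hypothetical.
"""
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

# Tags that make the browser fetch something, and the attribute holding the URL.
RESOURCE_ATTRS = {"script": "src", "img": "src", "iframe": "src", "link": "href"}


class ExternalResourceFinder(HTMLParser):
    """Collect (tag, absolute URL) pairs for resources hosted on other domains."""

    def __init__(self, page_url: str) -> None:
        super().__init__()
        self.page_url = page_url
        self.own_host = urlsplit(page_url).hostname
        self.external: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        attr_name = RESOURCE_ATTRS.get(tag)
        if attr_name is None:
            return
        attr_map = dict(attrs)
        # Count only stylesheet <link>s; canonical and alternate links are metadata, not page resources.
        if tag == "link" and "stylesheet" not in (attr_map.get("rel") or "").lower().split():
            return
        value = attr_map.get(attr_name)
        if not value:
            return
        absolute = urljoin(self.page_url, value)  # resolves relative and //protocol-relative URLs
        host = urlsplit(absolute).hostname
        if host and host != self.own_host:
            self.external.append((tag, absolute))


def find_external_resources(page_url: str, html: str) -> list[tuple[str, str]]:
    """Parse html and return off-site resources in document order."""
    finder = ExternalResourceFinder(page_url)
    finder.feed(html)
    finder.close()
    return finder.external


if __name__ == "__main__":
    sample_html = """
    <link rel="stylesheet" href="/style.css">
    <script src="//widgets.example-social.com/share.js"></script>
    <img src="/img/logo.png"><img src="https://podcast.example.org/badge.png" />
    """
    for tag, url in find_external_resources("https://www.example.com/", sample_html):
        print(f"{tag:>7}  {url}")
```

Anything this flags is a candidate to copy locally, move to a less speed-critical page (the way the ChrisCast icons went to the podcast page), or drop entirely.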

I Am Out of Steam (This’ll be a 3-Parter)

Here’s what I didn’t get to, as a teaser: I am running out of time, losing my flow, and falling out of the zone. This was supposed to be quick, but I guess I kept adding sections. So here’s a sneak peek at what’s still coming:

- Remove Sharing JavaScript Buttons from Home Page
- Offshore Your Site to Cloudflare’s Free CDN
- Remove Popups, Email Overlays, and Popovers
- Make Your Site as Static as Possible
- Cache as Hard as You Can
- Optimize Your Images and Photos for the Web
- Auto-Minify Your JavaScript, CSS, and HTML
- Make Each Page Painfully Unique (No Duplicates)
- Make Your Site Deep Rather than Shallow
- Give Attention to SEO Titles and Descriptions

Thanks for coming, and please consider subscribing to my Substack so that you won’t miss the forthcoming Part 2 and Part 3 (and maybe Part 4; there are a lot of bullets up there!).

UPDATE: I have written How to fight Google's Core Algo Update (Part 2), so please check it out!